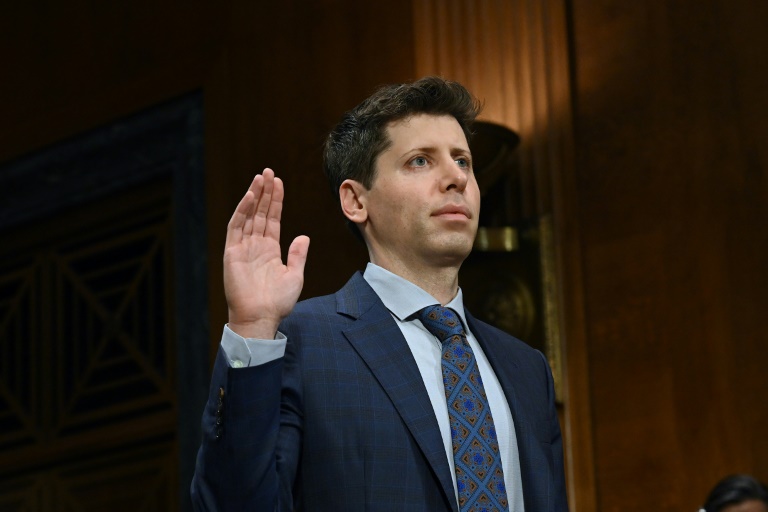

Sam Altman, the chief executive of OpenAI, the company behind ChatGPT, told US lawmakers on Tuesday that regulating artificial intelligence was essential, after his chatbot stunned the world.

The lawmakers voiced their deepest fears about AI's development. A leading senator opened the hearing on Capitol Hill with a computer-generated voice, sounding remarkably similar to his own, reading a text written by the bot.

“If you were listening from home, you might have thought that voice was mine and the words from me, but in fact, that voice was not mine,” said Senator Richard Blumenthal.

Artificial intelligence technologies “are more than just research experiments. They are no longer fantasies of science fiction, they are real and present,” Blumenthal said.

The latest figure to erupt from Silicon Valley, Altman testified before a US Senate subcommittee and urged Congress to impose new rules on big tech, despite deep political divisions that for years have blocked legislation aimed at regulating the internet.

But governments worldwide are under pressure to move quickly after ChatGPT, a bot that can churn out human-like content in an instant, went viral and both wowed and spooked users.

Altman has since become the global face of AI as he both pushes out his company's technology, including to Microsoft and scores of other companies, and warns that the work could have nefarious effects on society.

“OpenAI was founded on the belief that artificial intelligence has the potential to improve nearly every aspect of our lives, but also that it creates serious risks,” Altman told a Senate judiciary subcommittee hearing.

He insisted that in time, generative AI developed by OpenAI will “address some of humanity’s biggest challenges, like climate change and curing cancer.”

However, given the risks of disinformation, threats to jobs and other problems, "we think that regulatory intervention by governments will be critical to mitigate the risks of increasingly powerful models," he said.

– Go ‘global’ –

Altman suggested the US government might consider a combination of licensing and testing requirements before the release of powerful AI models, with a power to revoke permits if rules were broken.

He also recommended labeling and increased global coordination in setting up rules over the technology as well as the creation of a dedicated US agency to handle artificial intelligence.

“I think the US should lead here and do things first, but to be effective we do need something global,” he added.

Senator Blumenthal underlined that Europe had already made considerable progress with its AI Act, which is set to go to a vote at the European Parliament next month.

A sprawling legislative text, the EU measure could see bans on biometric surveillance, emotion recognition and certain policing AI systems.

Crucially for OpenAI, US lawmakers underlined that it also seeks to put generative AI systems such as ChatGPT and DALL-E in a category requiring special transparency measures, such as notifications to users that the output was made by a machine.

OpenAI’s DALL-E last year sparked an online rush to create lookalike Van Goghs and has made it extremely easy to generate illustrations and graphics with a simple request.

Lawmakers also heard warnings that the technology was still in its early stages.

“There are more genies yet to come for more bottles,” said New York University professor emeritus Gary Marcus, another panelist.

“We don’t have machines that can really… improve themselves. We don’t have machines that have self-awareness and we might not ever want to go there,” he said.

Christina Montgomery, chief privacy and trust officer at IBM, urged lawmakers not to take too broad-brush an approach when setting rules on AI.

“A chatbot that can share restaurant recommendations or draft an email has different impacts on society than a system that supports decisions on credit, housing, or employment,” she said.